Simulations are becoming increasingly popular in many fields, such as biology, materials science and drug design. Computer-based modelling tools now make it possible to study difficult problems in physics and mathematics without performing experiments. Theoretical models are increasingly used to interpret experimental data and to predict behaviour under conditions that are hard to reach in the laboratory. These methods also help in designing systems, storing and retrieving data, and predicting the physical properties of materials, including nanomaterials. In this thesis, quantum and classical calculations, namely ab initio methods and Monte Carlo simulation, will be used to study magnetic properties and the magnetocaloric effect.

Monte Carlo Method and Ising Model

Monte Carlo simulations (MCS) have been in use since the early days of computing. They have helped solve problems in many fields, including applied statistics, engineering, finance, business, design, computer science, telecommunications and the physical sciences. Progress in MCS has also made Bayesian multi-parameter problems tractable, which has led to wider use of Bayesian statistics.

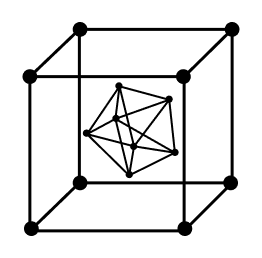

Statistical physics classifies spin models according to the number of degrees of freedom of each spin and the way the atomic spins interact. Three broad types of spin model can be distinguished: discrete spins, continuous spins, and arrow configurations on the bonds of a lattice. In this thesis, the Ising model is used to study both the magnetic properties and the magnetocaloric effect of such systems.

In statistical mechanics, the Ising model is a mathematical model of ferromagnetism. It is named after the physicist Ernst Ising. It consists of discrete variables that represent the magnetic dipole moments of atomic spins, each of which can take one of two values (+1 or −1). The spins are arranged on a lattice, or more generally a graph, which allows each spin to interact with its neighbours.
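To make the model concrete, the sketch below evaluates the standard nearest-neighbour Ising energy $E = -J\sum_{\langle ij\rangle} s_i s_j - h\sum_i s_i$ and the magnetisation per spin. The coupling `J`, the field `h`, the square lattice and the periodic boundaries are illustrative assumptions. The function names are hypothetical helpers and are not taken from the thesis.

```python
import numpy as np


def ising_energy(spins: np.ndarray, J: float = 1.0, h: float = 0.0) -> float:
    """E = -J * sum_<ij> s_i s_j - h * sum_i s_i on an L x L square lattice with periodic boundaries."""
    # Pair each site with its right and lower neighbour so every bond is counted exactly once.
    right = np.roll(spins, -1, axis=1)
    down = np.roll(spins, -1, axis=0)
    bond_sum = np.sum(spins * right) + np.sum(spins * down)
    return float(-J * bond_sum - h * np.sum(spins))


def magnetisation_per_spin(spins: np.ndarray) -> float:
    """Average spin value m = (1/N) * sum_i s_i."""
    return float(np.mean(spins))


spins = np.random.default_rng(1).choice([-1, 1], size=(8, 8))
print(ising_energy(spins), magnetisation_per_spin(spins))
```

A fully aligned lattice of N spins has 2N bonds, so its energy is −2NJ. This is the ferromagnetic ground state when h = 0.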

When Monte Carlo simulations are used to estimate averages, the sampling should favour the states that are most likely to occur. The thermal average of an observable Q is obtained by summing its value over all states of the system, each weighted by its Boltzmann probability:

$$\langle Q\rangle = \frac{\sum_a Q_a\, e^{-\beta E_a}}{\sum_a e^{-\beta E_a}}, \qquad \beta = \frac{1}{k_B T}.$$

Because this sum runs over an enormous number of states, the Monte Carlo method instead draws a random subset of M states {a_1, …, a_M} from a chosen probability distribution p_a. That distribution must therefore be known.
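The sketch below evaluates ⟨Q⟩ exactly by visiting every state, which shows why the full sum is impractical. The system is a small periodic one-dimensional Ising chain. The chain geometry, J = 1 and h = 0 are illustrative assumptions. The loop touches all 2^N configurations, so the cost doubles with each added spin.

```python
import itertools
import math


def exact_thermal_average(n_spins, beta, observable, J=1.0):
    """Return (<Q>, Z) for a periodic 1D Ising chain by summing over all 2**n_spins states."""
    Z = 0.0
    weighted_sum = 0.0
    for config in itertools.product((-1, 1), repeat=n_spins):
        # Nearest-neighbour energy with periodic boundary conditions.
        energy = -J * sum(config[i] * config[(i + 1) % n_spins] for i in range(n_spins))
        weight = math.exp(-beta * energy)  # Boltzmann factor
        Z += weight
        weighted_sum += observable(config, energy) * weight
    return weighted_sum / Z, Z


mean_energy, Z = exact_thermal_average(10, 0.5, lambda config, energy: energy)
print(mean_energy, Z)
```

For this chain the result can be checked against the transfer-matrix partition function Z = (2 cosh βJ)^N + (2 sinh βJ)^N.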

The difficult part of a Monte Carlo calculation is choosing the states so that the estimate is accurate. Monte Carlo methods use a Markov process to generate the states to include. The time evolution of the probability P_a(t) of finding the system in state a is given by the master equation:

$$\frac{dP_a(t)}{dt} = -\sum_{b} w(a\to b)\,P_a(t) + \sum_{b} w(b\to a)\,P_b(t)$$

Here w(a → b) is the transition probability per unit time from state a to state b. w(b → a) is the corresponding probability for the reverse transition, and t is the time of the Markov process.
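The master equation can be integrated numerically for a toy system to watch the probabilities relax. The sketch uses three states with energies 0, 1 and 2 and β = 1. Its rates follow the Metropolis form w(a → b) = min(1, e^{−β(E_b − E_a)})/(n − 1) with a uniform proposal. The energies, β, the rate choice and the explicit Euler integrator are all assumptions made for illustration. Starting from the highest-energy state, the populations converge to the Boltzmann distribution.

```python
import numpy as np


def metropolis_rates(energies, beta):
    """w[a, b] = min(1, exp(-beta*(E_b - E_a))) / (n - 1) for a != b (uniform proposal, assumed)."""
    n = len(energies)
    dE = energies[None, :] - energies[:, None]  # dE[a, b] = E_b - E_a
    rates = np.minimum(1.0, np.exp(-beta * dE)) / (n - 1)
    np.fill_diagonal(rates, 0.0)
    return rates


def integrate_master_equation(p0, rates, dt=0.01, t_final=50.0):
    """Explicit Euler integration of dP_a/dt = -sum_b w(a->b) P_a + sum_b w(b->a) P_b."""
    p = np.array(p0, dtype=float)
    out_rate = rates.sum(axis=1)  # total rate of leaving each state
    for _ in range(int(round(t_final / dt))):
        p = p + dt * (-out_rate * p + rates.T @ p)
    return p


energies = np.array([0.0, 1.0, 2.0])
beta = 1.0
p_final = integrate_master_equation([0.0, 0.0, 1.0], metropolis_rates(energies, beta))
boltzmann = np.exp(-beta * energies) / np.exp(-beta * energies).sum()
print(p_final, boltzmann)
```

Each Euler step conserves total probability exactly, because every term that removes probability from one state adds it to another.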

The state produced at each step need not stay the same: the process moves to new states according to the transition probability w(a → b). Two conditions apply to these probabilities. First, they do not change over time. Second, they depend only on the configurations of states a and b. Ergodicity is also required: in a sufficiently long run of the Markov process, the system must be able to reach any state from any other state. Ergodicity is violated if all the transition probabilities leading to some state are zero, because that state can then never be reached.

The balance condition expresses equilibrium: the rate at which the system enters any given state equals the rate at which it leaves it, so the probabilities no longer change. This condition alone does not guarantee that the process converges to the Boltzmann distribution rather than to another stationary distribution or to a limit cycle. To rule this out, a stronger condition called detailed balance is imposed:

$$P_a\,w(a\to b) = P_b\,w(b\to a).$$

This condition rules out limit cycles. Because the system is in thermal equilibrium, the probabilities P_a follow the Boltzmann distribution, P_a ∝ exp(−βE_a).
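Two short consequences follow directly from detailed balance; they are sketched here using the master equation in the form given above. First, every pair of gain and loss terms cancels, so the probabilities are stationary:

$$\frac{dP_a}{dt} = \sum_b \big[w(b\to a)\,P_b - w(a\to b)\,P_a\big] = 0.$$

Second, inserting the Boltzmann probabilities fixes only the ratio of forward and backward transition probabilities and leaves each one otherwise free:

$$\frac{w(a\to b)}{w(b\to a)} = \frac{P_b}{P_a} = e^{-\beta (E_b - E_a)}.$$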

Standard methods may not always meet the specific needs of a new problem, which is why it is important to adapt the Monte Carlo method to the problem at hand. Many different Markov processes can be proposed for this purpose. When it is hard to guess which Markov process will produce the correct transition probabilities, the acceptance rate is used to obtain suitable ones.

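The transition probability is split into two factors:

$$w(a\to b) = g(a\to b)\,A(a\to b).$$

Here g(a → b) is the selection probability, that is, the probability of proposing state b when the system is in state a. A(a → b) is the acceptance ratio, often simply called the "acceptance", which decides whether the proposed change is accepted. The selection probabilities g(a → b) and g(b → a) need not be equal. Detailed balance constrains only the ratio A(a → b)/A(b → a) = [g(b → a)/g(a → b)] e^{−β(E_b − E_a)}. For efficiency, the acceptance ratios are therefore chosen as close to 1 as this constraint allows.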
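One common way to satisfy that ratio while keeping acceptances as large as possible is the Metropolis–Hastings rule, A(a → b) = min(1, [g(b → a)/g(a → b)] e^{−β(E_b − E_a)}). It reduces to the Metropolis rule when the proposal is symmetric. The sketch below uses this rule as an example and is not presented as the thesis's own choice. The function names are hypothetical. The check confirms detailed balance for an asymmetric proposal.

```python
import math


def acceptance(delta_E, beta, g_forward=1.0, g_backward=1.0):
    """Metropolis-Hastings acceptance ratio A(a -> b).

    delta_E    = E_b - E_a
    g_forward  = g(a -> b), probability of proposing b from a
    g_backward = g(b -> a), probability of proposing a from b
    With g_forward == g_backward this is the Metropolis rule min(1, exp(-beta * delta_E)).
    """
    # Work with the logarithm to avoid overflow for large downhill moves.
    log_ratio = math.log(g_backward / g_forward) - beta * delta_E
    return 1.0 if log_ratio >= 0.0 else math.exp(log_ratio)


def transition_probability(delta_E, beta, g_forward, g_backward):
    """w(a -> b) = g(a -> b) * A(a -> b)."""
    return g_forward * acceptance(delta_E, beta, g_forward, g_backward)


E_a, E_b, beta = 0.3, 1.1, 2.0
g_ab, g_ba = 0.2, 0.5  # deliberately asymmetric proposal probabilities
forward = math.exp(-beta * E_a) * transition_probability(E_b - E_a, beta, g_ab, g_ba)
backward = math.exp(-beta * E_b) * transition_probability(E_a - E_b, beta, g_ba, g_ab)
print(forward, backward)  # equal: detailed balance holds
```

One of the two acceptances always equals 1. This is what "as close to 1 as possible" means in practice for this rule.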

The Metropolis algorithm, discussed in more detail in the next part, was introduced in 1953 by Nicholas Metropolis and his co-workers at the Los Alamos laboratory in New Mexico. Its core is a Markov chain that moves the system from a state a to a state b so that each state is visited with a probability proportional to its Boltzmann factor exp(−βE_a). The iterative structure of the algorithm lets you explore and sample the system's state space to estimate the quantities of interest.
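The sketch below applies the Metropolis algorithm to the two-dimensional Ising model using single-spin flips. A random spin is proposed for flipping (a symmetric proposal), and the flip is accepted with probability min(1, e^{−βΔE}). The square lattice, periodic boundaries, J = 1, h = 0 and the all-up starting state are assumptions. The function name is hypothetical. The two temperatures in the usage lie on either side of the exact critical point β_c = ln(1 + √2)/2 ≈ 0.44 (for J = 1).

```python
import numpy as np


def metropolis_ising(L, beta, n_sweeps, J=1.0, seed=0):
    """Single-spin-flip Metropolis sampling of the 2D Ising model (h = 0, periodic boundaries).

    Starts from the all-up state; returns the final lattice and the magnetisation per spin after each sweep.
    """
    rng = np.random.default_rng(seed)
    spins = np.ones((L, L), dtype=int)
    magnetisation = np.empty(n_sweeps)
    for sweep in range(n_sweeps):
        sites = rng.integers(0, L, size=(L * L, 2))  # one sweep = L*L attempted flips
        uniforms = rng.random(L * L)
        for (i, j), u in zip(sites, uniforms):
            neighbours = (spins[(i + 1) % L, j] + spins[(i - 1) % L, j]
                          + spins[i, (j + 1) % L] + spins[i, (j - 1) % L])
            delta_E = 2.0 * J * spins[i, j] * neighbours  # energy change if s_ij is flipped
            # Metropolis rule: always accept downhill moves, accept uphill ones with prob exp(-beta*dE).
            if delta_E <= 0.0 or u < np.exp(-beta * delta_E):
                spins[i, j] = -spins[i, j]
        magnetisation[sweep] = spins.mean()
    return spins, magnetisation


_, m_cold = metropolis_ising(16, 1.0, 50)   # deep in the ordered phase
_, m_hot = metropolis_ising(16, 0.1, 500)   # well above the critical temperature
print(m_cold[-1], m_hot[100:].mean())
```

At β = 1 the lattice stays almost fully magnetised. At β = 0.1 the magnetisation averages out near zero once the first sweeps are discarded as equilibration.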

Ab-Initio Calculations

Ab initio methods predict the magnetic, electronic and optical properties of a material by solving the equations of quantum mechanics without any adjustable (empirical) parameters. Density functional theory (DFT) is a useful tool for this. It is formulated in terms of the electron density and is commonly used to predict the electronic structure of solid-state materials. DFT is one of the most widely used methods for studying the ground-state properties of solids, because it allows the ground state of many-electron systems to be determined.

The properties of materials can be studied with the time-independent Schrödinger equation, $\hat{H}\Psi = E\Psi$. This equation describes how quantum objects behave in a physical system. Its eigenvalues E are the allowed energy levels, and its eigenfunctions Ψ are the corresponding wave functions. Solving it is central to quantum physics because it lets us understand and predict how particles and systems behave at the atomic and subatomic scales.
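As a minimal illustration of "solving" $\hat{H}\Psi = E\Psi$, the sketch below discretises a one-dimensional Hamiltonian on a grid and diagonalises it. It uses Hartree atomic units (ħ = m_e = 1), an infinite square well of unit width and a second-order finite-difference Laplacian. These are assumptions chosen because the exact levels E_n = n²π²/2 are known and provide a check.

```python
import numpy as np


def particle_in_box_levels(n_levels=3, n_points=500, length=1.0):
    """Lowest eigenvalues/eigenvectors of H = -1/2 d^2/dx^2 for an infinite square well.

    The wave function vanishes at x = 0 and x = length, so only interior grid points are kept.
    """
    dx = length / (n_points + 1)
    # Finite-difference kinetic energy gives a tridiagonal Hamiltonian matrix.
    diag = np.full(n_points, 1.0 / dx**2)
    off = np.full(n_points - 1, -0.5 / dx**2)
    hamiltonian = np.diag(diag) + np.diag(off, 1) + np.diag(off, -1)
    energies, wavefunctions = np.linalg.eigh(hamiltonian)  # solves H psi = E psi
    return energies[:n_levels], wavefunctions[:, :n_levels]


numerical, _ = particle_in_box_levels()
exact = np.array([(n * np.pi) ** 2 / 2 for n in (1, 2, 3)])
print(numerical, exact)
```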

The total Hamiltonian of a system of interacting electrons and nuclei is the sum of the total kinetic energy operator $\hat{T}$ and the operator $\hat{V}$ for all Coulomb interactions. In Gaussian units it can be written as

$$\hat{H} = \hat{T} + \hat{V} = -\sum_{i}\frac{\hbar^{2}}{2m_e}\nabla_{i}^{2} - \sum_{I}\frac{\hbar^{2}}{2M_I}\nabla_{I}^{2} + \frac{1}{2}\sum_{i\neq j}\frac{e^{2}}{|\vec r_i-\vec r_j|} - \sum_{i,I}\frac{Z_I e^{2}}{|\vec r_i-\vec R_I|} + \frac{1}{2}\sum_{I\neq J}\frac{Z_I Z_J e^{2}}{|\vec R_I-\vec R_J|}.$$

Lowercase indices label electrons at positions $\vec r_i$. Uppercase indices label nuclei of mass $M_I$ and charge $Z_I e$ at positions $\vec R_I$.

Several Approximations

Born–Oppenheimer Approximation

Born and Oppenheimer introduced their approximation in 1927. It was the first major approximation to separate the motion of the electrons from that of the nuclei in a molecule or solid. Because electrons are much lighter than nuclei, they move much faster, so the nuclei can be treated as fixed while the electrons adjust to their positions. The total Hamiltonian of the system then reduces to an electronic Hamiltonian in which the nuclear coordinates appear only as parameters, and this makes the Schrödinger equation simpler to solve. However, the approximation does not remove the difficulties caused by the interactions between electrons. Further approaches have been proposed to address these difficulties and make quantum calculations feasible for a wide range of systems. They include the Hartree approximation, the Hartree–Fock approximation and density functional theory (DFT). These approximations offer different trade-offs between accuracy and computational efficiency in dealing with multi-electron systems. They have greatly improved our understanding of the properties of molecules and materials.
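The following is a sketch of what the approximation leaves to be solved, in Gaussian units and the usual notation (electrons at $\vec r_i$, nuclei of charge $Z_I e$ clamped at $\vec R_I$). With the nuclei fixed, the nuclear kinetic energy drops out. The nucleus–nucleus repulsion also becomes a constant $E_{NN}$, leaving the electronic Hamiltonian

$$\hat{H}_e = -\sum_i \frac{\hbar^2}{2m_e}\nabla_i^2 + \frac12\sum_{i\ne j}\frac{e^2}{|\vec r_i - \vec r_j|} - \sum_{i,I}\frac{Z_I e^2}{|\vec r_i - \vec R_I|},$$

in which the $\vec R_I$ enter only as parameters. The total energy at a given nuclear geometry is the electronic eigenvalue plus $E_{NN}$. The middle (electron–electron) term is the one that the Hartree, Hartree–Fock and DFT approaches treat approximately.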

Hartree Approximation

The Hartree approximation tackles the many-electron problem by treating the electrons as independent. Each electron moves in a mean field created by the nuclei and all the other electrons. The total wave function Ψ of a system with more than one electron is then approximated as the product of the single-electron wave functions ψ_i. Each ψ_i obeys its own single-particle Schrödinger equation, whose eigenvalues are the energy levels of the corresponding electronic state or orbital. The potential of the electron–nucleus interaction can be written as

$$V_N(\vec r) = -Ze^2\sum_{\vec R}\frac{1}{|\vec r - \vec R|}.$$

The effective potential felt by an electron through its interaction with the nuclei and the other electrons can be written as

$$V_{\text{eff}}(\vec r) = V_H(\vec r) + V_N(\vec r),$$

where $V_H$ is the Hartree potential of the electron cloud. The Hartree–Fock method adds a further correction, the exchange term, to the Hartree approximation.
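Written out in Gaussian units, the Hartree equations take the standard form below, with $V_{\text{eff}} = V_H + V_N$ as above. The equations are coupled through the density, so they must be solved self-consistently. For brevity, each electron's own orbital is kept in ρ here, although Hartree's original scheme excludes it (the self-interaction):

$$\Psi(\vec r_1,\dots,\vec r_N) \approx \prod_{i=1}^{N}\psi_i(\vec r_i), \qquad \Big[-\frac{\hbar^2}{2m_e}\nabla^2 + V_{\text{eff}}(\vec r)\Big]\psi_i(\vec r) = \varepsilon_i\,\psi_i(\vec r),$$

$$V_H(\vec r) = e^2\int\frac{\rho(\vec r\,')}{|\vec r - \vec r\,'|}\,d\vec r\,', \qquad \rho(\vec r) = \sum_{i}|\psi_i(\vec r)|^2.$$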

Hartree–Fock Approximation

The Hartree–Fock approximation is a basic idea in quantum physics. It is built on the Pauli exclusion principle, which states that two identical fermions cannot occupy the same quantum state at the same time. This principle applies to fermions such as electrons, protons and neutrons, and it requires the many-electron wave function to be antisymmetric. In the Hartree–Fock method, the simple product of orbitals is replaced by an antisymmetric wave function, a Slater determinant of single-electron orbitals, which is then used in the Schrödinger equation. The main difference from the Hartree method is the resulting exchange term, which accounts for the antisymmetry required by the Pauli exclusion principle. This term captures exchange effects between electrons that the simpler Hartree model ignores, so Hartree–Fock gives a more accurate picture of the electronic structure. However, it is more demanding to solve computationally because the exchange term is nonlocal.
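The sketch below shows numerically how a Slater determinant enforces the Pauli principle. Swapping two electrons flips the sign of the wave function, and placing two electrons at the same point makes it vanish. For illustration it assumes three same-spin electrons in the lowest three orbitals of a one-dimensional infinite square well. The helper names are hypothetical.

```python
import math
import numpy as np


def box_orbital(n, x, length=1.0):
    """n-th eigenfunction of a 1D infinite square well of width `length`."""
    return math.sqrt(2.0 / length) * np.sin(n * np.pi * x / length)


def slater_determinant(positions, length=1.0):
    """Antisymmetric N-electron wave function built from the lowest N box orbitals.

    Row i holds every orbital evaluated at electron i's coordinate, so swapping two
    electrons swaps two rows and flips the sign of the determinant.
    """
    n = len(positions)
    matrix = np.array([[box_orbital(k + 1, x, length) for k in range(n)] for x in positions])
    return float(np.linalg.det(matrix)) / math.sqrt(math.factorial(n))


psi = slater_determinant([0.1, 0.4, 0.7])
psi_swapped = slater_determinant([0.4, 0.1, 0.7])  # electrons 1 and 2 exchanged
psi_coincident = slater_determinant([0.3, 0.3, 0.7])  # two electrons at the same point
print(psi, psi_swapped, psi_coincident)
```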

Density Functional Theory

Density functional theory, developed by Hohenberg, Kohn and Sham, is a powerful way to determine the electronic structure of matter in solid-state physics and quantum chemistry. It uses the electron density ρ(r→) to determine the electronic properties of a system, instead of solving the Schrödinger equation for the full many-electron wave function. The Kohn–Sham theory built on earlier work by Thomas and Fermi, who first expressed the energy of an electron system in terms of its density. Dirac extended the Thomas–Fermi model by adding a local approximation for electron exchange. This was an early step towards the exchange–correlation terms that make modern DFT calculations more accurate.

Theorems of Hohenberg and Kohn

For a system of N interacting electrons, the Hamiltonian can be written as $\hat H = \hat T + \hat V_{ee} + \hat V_{ext}$. Here $\hat T$ is the kinetic energy, $\hat V_{ee}$ the electron–electron repulsion and $\hat V_{ext}$ the external potential due to the nuclei. Hohenberg and Kohn showed that the ground-state wave function $\Psi_0 = \Psi[\rho_0]$ is a unique functional of the ground-state electron density $\rho_0$. Consequently, the ground-state expectation value of any observable $\hat O$ is also a functional of the density. The total energy of the system is

$$E_V[\rho] = F_{HK}[\rho] + \int V_{ext}(\vec r)\,\rho(\vec r)\,d\vec r, \qquad F_{HK}[\rho] = T[\rho] + V_{ee}[\rho].$$

The Hohenberg–Kohn theorems concern systems of interacting electrons in an external potential. The first theorem states that the external potential is uniquely determined, up to an additive constant, by the ground-state electron density ρ(r→). Consequently, the ground-state energy E_0 is a unique functional of that density, E_0[ρ(r→)]. The second theorem states that this energy functional reaches its minimum when the true ground-state density is used. The ground-state energy can therefore be expressed as a functional of the electron density alone.

Stated more formally, consider a system of interacting particles in an external potential V_ext(r→). That potential is determined uniquely, up to a constant, by the ground-state density ρ_0(r→). A universal energy functional E[ρ] can be defined for any external potential V_ext(r→). For a given V_ext(r→), the global minimum of this functional is the exact ground-state energy of the system. The density that minimises it is the exact ground-state density ρ_0(r→).
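The variational content of the second theorem can be summarised compactly using $E_V[\rho] = F_{HK}[\rho] + \int V_{ext}\rho\,d\vec r$. For any admissible trial density $\tilde\rho$ that integrates to the number of electrons N,

$$E_0 = E_V[\rho_0] \le E_V[\tilde\rho], \qquad \int \tilde\rho(\vec r)\,d\vec r = N.$$

The ground state therefore follows from a constrained minimisation, with a Lagrange multiplier μ (the chemical potential) enforcing the particle number:

$$\frac{\delta}{\delta\rho(\vec r)}\Big\{E_V[\rho] - \mu\Big(\int\rho(\vec r\,')\,d\vec r\,' - N\Big)\Big\} = 0 \;\Longrightarrow\; \frac{\delta F_{HK}[\rho]}{\delta\rho(\vec r)} + V_{ext}(\vec r) = \mu.$$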

In short, the Hohenberg–Kohn theorems provide the formal basis for determining the total energy and ground-state properties of a system of interacting electrons in an external potential.

Kohn–Sham Formulation

The Kohn–Sham formulation is one of the central ideas of density functional theory (DFT). It replaces the complicated system of interacting electrons with an auxiliary system of non-interacting electrons that has the same ground-state density, which makes the electronic properties much easier to compute. The energy of the system can then be written as

$$E_V[\rho] = T_s[\rho] + V_{ext}[\rho] + V_H[\rho] + E_{xc}[\rho].$$

T_s[ρ] is the kinetic energy of the non-interacting electrons, and V_ext[ρ] is the energy of the electrons in the external potential. V_H[ρ] is the classical Coulomb (Hartree) energy of the electron–electron interaction, and E_xc[ρ] is the exchange–correlation energy.

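Minimising this energy leads to the Kohn–Sham equations, written here in Hartree atomic units:

$$\Big[-\tfrac12\nabla^2 + V_{eff}(\vec r)\Big]\psi_i(\vec r) = \varepsilon_i\,\psi_i(\vec r),$$

where

$$V_{eff}(\vec r) = V_{ext}(\vec r) + V_H(\vec r) + V_{xc}(\vec r), \qquad V_H(\vec r) = \int \frac{\rho(\vec r\,')}{|\vec r - \vec r\,'|}\,d\vec r\,', \qquad \rho(\vec r) = \sum_i |\psi_i(\vec r)|^2.$$

V_H(r→) is the Hartree potential and V_xc(r→) = δE_xc/δρ(r→) is the exchange–correlation potential.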

The Kohn–Sham equations show that the only unknown left is the exchange–correlation functional. This is the key part of DFT, and it contains the electron–electron exchange and correlation effects.
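Because V_eff depends on ρ, which in turn depends on the orbitals, the Kohn–Sham equations must be solved self-consistently. The sketch below shows only the structure of that loop, under loudly stated assumptions. It uses a one-dimensional grid, a harmonic external potential and a softened Coulomb interaction 1/√((x − x′)² + 1), since the bare 1/|x − x′| diverges in 1D. It also assumes two electrons in one doubly occupied orbital and linear density mixing. Finally, V_xc is set to zero as a placeholder for the unknown exchange–correlation functional, so this is strictly a Hartree-level calculation. The function name and all parameters are hypothetical.

```python
import numpy as np


def toy_kohn_sham_1d(n_grid=201, box=10.0, n_electrons=2, mixing=0.3, tol=1e-8, max_iter=500):
    """Self-consistent loop for a toy 1D Kohn-Sham problem (Hartree atomic units).

    Assumptions (illustrative only): harmonic V_ext, soft-Coulomb interaction,
    spin-unpolarised doubly occupied orbitals, and V_xc = 0.
    """
    x = np.linspace(-box / 2, box / 2, n_grid)
    dx = x[1] - x[0]
    v_ext = 0.5 * x**2
    # Kinetic energy -1/2 d^2/dx^2 by second-order finite differences.
    kinetic = (np.diag(np.full(n_grid, 1.0 / dx**2))
               + np.diag(np.full(n_grid - 1, -0.5 / dx**2), 1)
               + np.diag(np.full(n_grid - 1, -0.5 / dx**2), -1))
    soft_coulomb = 1.0 / np.sqrt((x[:, None] - x[None, :]) ** 2 + 1.0)
    n_occupied = n_electrons // 2
    rho = np.zeros(n_grid)  # start from the non-interacting problem
    for iteration in range(1, max_iter + 1):
        v_hartree = (soft_coulomb @ rho) * dx
        v_xc = np.zeros(n_grid)  # placeholder: exchange-correlation omitted
        eps, orbitals = np.linalg.eigh(kinetic + np.diag(v_ext + v_hartree + v_xc))
        orbitals /= np.sqrt(dx)  # normalise so that sum(|psi|^2) * dx = 1
        rho_new = 2.0 * np.sum(orbitals[:, :n_occupied] ** 2, axis=1)
        if np.max(np.abs(rho_new - rho)) < tol:
            return x, rho_new, eps[:n_occupied], iteration
        rho = (1.0 - mixing) * rho + mixing * rho_new  # linear density mixing
    raise RuntimeError("SCF loop did not converge")


x, rho, eps, n_iter = toy_kohn_sham_1d()
print(f"converged in {n_iter} iterations, eps_1 = {eps[0]:.4f}")
```

In a real DFT code, the `v_xc` line is where an approximate functional such as LDA or GGA would enter. Mixing the old and new densities is a common way to keep the iteration from oscillating.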

Local Density Approximation

The Local Density Approximation (LDA) treats the inhomogeneous electron gas as locally uniform. Each small region contributes the exchange–correlation energy of a homogeneous electron gas with the same density. For a density ρ(r→), the exchange–correlation energy is

$$E_{xc}^{LDA}[\rho] = \int \epsilon_{xc}^{LDA}\big(\rho(\vec r)\big)\,\rho(\vec r)\,d\vec r,$$

where ε_xc^LDA(ρ) is the exchange–correlation energy per electron of a uniform electron gas of density ρ. LDA is computationally cheap and often gives reasonable results. However, it can fail in systems with strongly localised electrons or in molecules where the charge density varies rapidly. Because this functional cannot describe every system in which the electron density changes strongly with position r→, more elaborate approximations have been developed to improve its accuracy. These include the Generalised Gradient Approximation (GGA) and hybrid functionals.
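As a sketch of the LDA integral, the snippet below evaluates only its exchange part. It uses the Dirac (Slater) exchange energy per electron of the uniform gas, ε_x(ρ) = −(3/4)(3/π)^{1/3} ρ^{1/3} in Hartree atomic units. The density is the hydrogen 1s density ρ(r) = e^{−2r}/π. The choice of hydrogen, the neglect of spin polarisation and the omission of correlation are simplifications made for illustration. The result can be checked against the closed form −(81/256)·3^{1/3}π^{−2/3}, which is about −0.213 hartree.

```python
import numpy as np


def lda_exchange_energy_per_electron(rho):
    """Dirac exchange energy per electron of a uniform electron gas (Hartree atomic units)."""
    return -0.75 * (3.0 / np.pi) ** (1.0 / 3.0) * np.cbrt(rho)


def lda_exchange_energy(r, rho):
    """E_x^LDA = integral of eps_x(rho) * rho over space, for a spherically symmetric density."""
    integrand = lda_exchange_energy_per_electron(rho) * rho * 4.0 * np.pi * r**2
    return float(np.sum(0.5 * (integrand[1:] + integrand[:-1]) * np.diff(r)))  # trapezoid rule


r = np.linspace(0.0, 30.0, 30001)
rho_1s = np.exp(-2.0 * r) / np.pi  # hydrogen 1s density, integrates to one electron
e_x = lda_exchange_energy(r, rho_1s)
e_x_exact = -(81.0 / 256.0) * 3.0 ** (1.0 / 3.0) * np.pi ** (-2.0 / 3.0)
print(e_x, e_x_exact)
```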

Generalized Gradient Approximation

GGA functionals were developed to give a better description of chemical bonds, charge-density distributions and electronic properties, especially where the electron density varies in space. They are particularly useful for complex molecules and materials, or for systems whose electrons are distributed very unevenly. Density functional theory (DFT) calculations use GGA widely because it predicts electronic properties more accurately, for a wider range of systems, than the simpler LDA. The GGA functional can be written as

$$E_{xc}^{GGA}[\rho] \approx \int \epsilon_{xc}^{GGA}\big(\rho(\vec r), |\nabla\rho(\vec r)|\big)\,\rho(\vec r)\,d\vec r.$$

Here ε_xc^GGA is the exchange–correlation energy per particle of the inhomogeneous electron system, which now depends on both the local density and its gradient. Further refinements of these techniques have made DFT more useful for systems in which van der Waals forces matter, such as complex chemical structures, layered materials and adsorption problems.
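To show how the gradient enters, the sketch below uses the exchange part of the PBE (Perdew–Burke–Ernzerhof) functional as one concrete GGA; the text itself does not name a specific GGA. PBE multiplies the LDA exchange energy density by an enhancement factor F_x(s) = 1 + κ − κ/(1 + μs²/κ), with κ = 0.804 and μ ≈ 0.21951. Here s = |∇ρ|/(2k_Fρ) is the reduced gradient and k_F = (3π²ρ)^{1/3}. As a simplification, the sketch reuses a spin-unpolarised hydrogen 1s density, for which |∇ρ| = 2ρ analytically. Correlation is omitted.

```python
import numpy as np

KAPPA = 0.804  # PBE exchange parameters
MU = 0.21951


def pbe_enhancement_factor(s):
    """F_x(s) = 1 + kappa - kappa / (1 + mu s^2 / kappa); F_x(0) = 1 recovers LDA exchange."""
    return 1.0 + KAPPA - KAPPA / (1.0 + MU * s**2 / KAPPA)


def exchange_energy(r, rho, grad_rho, gga=True):
    """Spin-unpolarised exchange energy of a spherical density (Hartree atomic units)."""
    eps_x_lda = -0.75 * (3.0 / np.pi) ** (1.0 / 3.0) * np.cbrt(rho)
    k_fermi = np.cbrt(3.0 * np.pi**2 * rho)
    s = np.abs(grad_rho) / (2.0 * k_fermi * rho)  # reduced density gradient
    factor = pbe_enhancement_factor(s) if gga else 1.0
    integrand = eps_x_lda * factor * rho * 4.0 * np.pi * r**2
    return float(np.sum(0.5 * (integrand[1:] + integrand[:-1]) * np.diff(r)))  # trapezoid rule


r = np.linspace(0.0, 30.0, 30001)
rho = np.exp(-2.0 * r) / np.pi  # hydrogen 1s density (illustrative choice)
grad_rho = -2.0 * rho           # d(rho)/dr for this density
e_x_lda = exchange_energy(r, rho, grad_rho, gga=False)
e_x_gga = exchange_energy(r, rho, grad_rho, gga=True)
print(e_x_lda, e_x_gga)
```

Because F_x ≥ 1 wherever the density varies, the GGA exchange energy comes out more negative than the LDA value. The factor saturates at 1 + κ in the low-density tail.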

Conclusion

Monte Carlo and ab initio simulations are both widely used in scientific research and computational studies. They help researchers understand and predict the behaviour of physical and mathematical systems.
